Things are obvious to us. We live on auto-pilot, passing the same things every day, so we have stopped wondering what they really are. We got accustomed to them and gave up any reflection or everyday analysis.

"2+2=4" seems to be obvious. Can there be any surprise? Maybe in language which allows to override "+" operator someone could set a trap but it should not be anythings surprising here. Yet, actually there is a non trivial proof that 2+2 is actually 4. You can find it here -> A real proof that 2+2=4

Another situation and another statement: "There is a bug in my program". This is a very common situation, so you have most likely become used to its context and you do not redefine the general concepts of "program" and "bug" each time - it's as obvious as 2+2=4.

But if a program runs without any exception, is there a bug in it? If there is a bug but no one is able to spot it, can we say that there is a bug? Is a bug the same in theory and in practice? To answer these questions we need to investigate the concepts of "program" and "bug" a little bit deeper...

Roots

The picture posted above is copied from the Wikipedia page called Human Computer. Yes... "computers" existed even before computers were invented.

Ha! So not only do we need to define a program, it is also not clear what a computer is. The sentence "I just bought a computer" may just as well describe the purchase of a metal box or, in the worst case, an act of slavery.

The part where they are described as "a person performing mathematical calculations" is very interesting. So a "computer" was performing mathematical calculations - even very simple ones like the following:

fun isRectangularTriangle(a:Integer,b:Integer,c:Integer):Boolean =

pow(a,2)+pow(b,2) == pow(c,2)

So this "mathematical calculation" checks whether

a^2 + b^2 = c^2

or in other words whether the triangle is rectangular (right-angled). But can we be sure it works that way? What if pow actually just triggers a "beep" from the computer speaker?

What would be extremely useful here is a "law" or "theorem" guaranteeing that pow(x,2)=x*x for every possible argument. Of course now we need to define how the "*" sign works, but let's assume this is standard multiplication - this is just an introduction to more interesting topics.
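A test over sample values is weaker than such a theorem, but it shows what the "law" would say. The sketch below is in Scala and assumes a hypothetical pow built on BigInt, because the article does not say where its pow comes from:

```scala
object PowLawDemo {
  // Hypothetical integer pow (an assumption; not the article's pow).
  def pow(base: BigInt, exponent: Int): BigInt = base.pow(exponent)

  // The "law" we would like to hold for every argument.
  def squareLawHolds(x: BigInt): Boolean = pow(x, 2) == x * x

  def main(args: Array[String]): Unit = {
    // Checking a finite sample is evidence, not a proof.
    val samples = (-1000 to 1000).map(BigInt(_))
    println(samples.forall(squareLawHolds)) // true
  }
}
```

Passing this check only tells us that the law holds for the sampled values; a theorem would cover all of them.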

Can something go wrong?

If we look closely at our rectangular triangle function, we may think that the obvious problems appear at the ends of the int bounds. What is the int range? "I know, I know, the Java docs say the maximum is 2^31 - 1." Yes. This is an example of seeing the world through the lens of a single programming language. Unfortunately many programmers stay with their first commercial language for their whole life and mistake its limitations for general programming rules. If you switch from Java to Haskell you will see that an Integer does not have to be limited at all.
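The same contrast can be sketched in Scala, where Int is a 32-bit value and BigInt is unbounded. The two function names below are hypothetical variants of the article's isRectangularTriangle, introduced only for this illustration:

```scala
object OverflowDemo {
  // 32-bit arithmetic: squares silently wrap around on overflow.
  def isRectangularInt(a: Int, b: Int, c: Int): Boolean =
    a * a + b * b == c * c

  // Arbitrary-precision arithmetic: no upper bound on the values.
  def isRectangularBig(a: BigInt, b: BigInt, c: BigInt): Boolean =
    a * a + b * b == c * c

  def main(args: Array[String]): Unit = {
    // 65536 * 65536 = 2^32, which wraps around to 0 as an Int.
    val n = 65536
    println(isRectangularInt(n, n, 0)) // true (wrong answer)
    println(isRectangularBig(n, n, 0)) // false
  }
}
```

With Int the function claims that sides 65536, 65536 and 0 form a rectangular triangle, and no exception is thrown along the way.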

Mathematical proof of reading from a file

While we theorize about the rectangular triangle problem we need to take one step back so as not to miss a very interesting area. The question is: how are a, b and c actually provided? Maybe there is a CSV file somewhere with only one line?

3,4,5

Then maybe we can read those values with the following two functions:

fun read(f:File):String
fun parse(line:String):(a:Integer,b:Integer,c:Integer)

And then compose those three functions into one solid mechanism which calculates the answer from the provided data file:

read andThen parse andThen isRectangularTriangle

- Hooooooooooooooold on! And what if there is an error?

- what error?

- file reading error

- what? .. how??

- like there is no file or something

- so maybe... maybe... we could assume that it is always there ...?

This very practical problem was described, together with possible solutions, in the following abstract:

As stated in the screenshot, theoretical knowledge runs into serious difficulties when you want to describe something which can break. How can you describe mathematically a function that reads a file or executes a query on a database?

A solution to this problem was finally found by Eugenio Moggi, and the abstract above is taken from his work called Notions of computation and monads.

Bug as a function

As a reminder: addition is an example of a Total Function, because a result is defined for every argument. In contrast, division is not defined for zero, so it is a Partial Function. When we have a method/function/whatever which claims that it is able to divide any two numbers

divide(a:Double,b:Double) : Double

then we have an error for a zero divisor. It is only one specific value, but still - an error. Other examples:

- Function RegistrationForm => User is defined for every "proper" form but not for a form which has the value "aaaaa" in the age field.

- Function File=>String is defined for the set of files which actually exist at the expected location

- Function OrderId=>Order is defined only for existing orders

- Function RequestToExternalAPI => RESPONSE is of course a partial function, defined for the cases when we actually receive a response

Dear reader - I don't know if you have ever approached the definition of an error from this perspective. During my "Java Period" I treated partial functions like total functions by cheating with exceptions. Sometimes we control exceptions by throwing them, but sometimes exceptions control us by throwing themselves by surprise (maybe breaking a 1000000 dollar purchase process). So besides the most obvious definition, that a bug is a situation where a program returns an incorrect result, we can add another one: a bug is a partial function pretending to be a total one.

So something like this :

readGeometry:String=>Boolean = parseLine andThen isRectangularTriangle

can be defined as a bug, because the parseLine function is defined only for specially formatted lines, which are a subset of all possible text lines. This solution can even pass all tests if the test data is very naive.
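A minimal Scala sketch of this situation follows. The bodies of parseLine and isRectangularTriangle are assumptions made for illustration; the article only gives their names and types:

```scala
import scala.util.Try

object ReadGeometryDemo {
  // Hypothetical parser: only defined for lines shaped like "3,4,5".
  val parseLine: String => (Int, Int, Int) = line => {
    val parts = line.split(",").map(_.trim.toInt)
    (parts(0), parts(1), parts(2))
  }

  val isRectangularTriangle: ((Int, Int, Int)) => Boolean = {
    case (a, b, c) => a * a + b * b == c * c
  }

  // The type String => Boolean hides the fact that parseLine is partial.
  val readGeometry: String => Boolean = parseLine andThen isRectangularTriangle

  def main(args: Array[String]): Unit = {
    println(readGeometry("3,4,5"))                // true
    println(Try(readGeometry("aaaaa")).isFailure) // true
  }
}
```

A test suite that only ever feeds "3,4,5" would pass, while the second call shows that the declared type promises more than the function delivers.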

Compositions and Effects

The general problem with exceptions is that it is difficult or even impossible to compose functions when we are cheating with types. And we want to compose, because composition is the Holy Grail of programming. How to explain it? When we have a function String=>(Int,Int) which promises that it will return a tuple (Int,Int), but in reality it can return either this tuple or who knows which exception, then we cannot just compose it with another function (Int,Int) => Boolean, because we cannot be sure that we will receive (Int,Int) at the input.

OK - it is very easy to find flaws, but is there any solution? Yes, there is! Instead of pretending that a partial function is total, we can actually convert a partial function into a total function using type manipulation. And this is (most likely) what Eugenio Moggi proposed in his work ("most likely" because I'm unable to understand all of it).

So let's start with the classical solution:

val divide: (Int, Int) => Int = (a, b) => a / b

try {
  divide(4, 0)
} catch {
  case e: ArithmeticException => logException
}

It is important to understand that try is an artificial glue here, which connects reality to fake types. Let's reveal the true nature of the function.

val partialDivide: PartialFunction[(Int, Int), Int] = {
  case (a, b) if b != 0 => a / b
}
println(partialDivide.isDefinedAt(4,0)) //false

Now the magic happens! We change the partial function into a total function by introducing a different category of types:

val totalDivide: ((Int, Int)) => Option[Int] = partialDivide.lift
println(totalDivide(4,0)) //None
println(totalDivide(4,2)) //Some(2)

"totalDivide" is a total function and for every argument it has defined result. Where division has sense result will be Some(number) but where it is not defined - zero - it is None. The question may appear - "ok, but how this differs from null?" . First of all we know the type of Option - it may be Option[Int] , Option[String] , Option[User] where null is just ... null. When we have defined set of type values Some and None we can transfrom those values with pattern matching and high order functions - first mechanism doesn't exists in Java and second is quite new - maybe that's why this approach is not so popular in the mainstream.

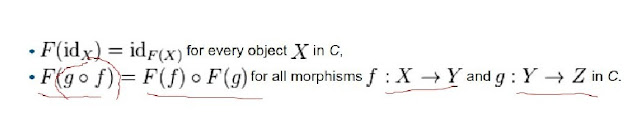

We talked a little bit about composition, so how can this totality help here? There is a whole theory describing how to compose those things. Some popular words which may be known to you, dear reader, are Functor or Monad, but in general you can call those "wrapper types" Effects. So Option will be an effect of "lack of something", like the result of division by zero, Future can be an effect of time in computation, etc...

Such an Effect should follow some well-defined laws, which can be checked by tools like QuickCheck or ScalaCheck - there is even a ready-to-use test template in scalaz.

If our effects follow those laws, we can easily compose them with pure total functions, like in this example:

Option(x).map(f).map(g) is equal to Option(x).map(f andThen g)
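This equality can be checked by hand for a range of sample values, without any testing library. The functions f and g below are arbitrary placeholders chosen for illustration, and a property-based tool would generate the samples instead of the fixed range assumed here:

```scala
object FunctorLawDemo {
  // Arbitrary pure total functions (placeholders for this sketch).
  val f: Int => Int = _ + 1
  val g: Int => String = n => s"#$n"

  // Composition law: mapping twice equals mapping once with f andThen g.
  def compositionHolds(opt: Option[Int]): Boolean =
    opt.map(f).map(g) == opt.map(f andThen g)

  def main(args: Array[String]): Unit = {
    val samples: List[Option[Int]] = None :: (-100 to 100).map(Option(_)).toList
    println(samples.forall(compositionHolds)) // true
  }
}
```

As with any test over samples, this supports the law for the checked values rather than proving it.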

Summary

Let's stop here. The topic is enormous and a lot of powerful constructs for composing Effects, like Monad Transformers, Kleisli or Foldable, wait around the corner. We started this article with the thesis that every day we work in auto-pilot mode and we don't have the time or energy to contemplate the things we do every day. So instead of diving into the details of the machinery, stay on a more abstract level and compare your usual approach to writing programs with the philosophy of programming described in this article. Maybe something magical will happen...